In our prior course, you learned how the nature of an investigator’s research question dictates the type of study approach and design that might be applied to achieve the study aims. Intervention research typically asks questions related to the outcomes of an intervention effort or approach. However, questions also arise concerning implementation of interventions, separate from understanding their outcomes. Practical, philosophical, and scientific factors contribute to investigators’ intervention study approach and design decisions.

In this chapter you learn:

In our earlier course you became familiar with the ways that research questions lead to research approach and methods. Intervention and evaluation research are not different: the question dictates the approach. In the earlier course, you also became familiar with the philosophical, conceptual and practical aspects of different approaches to social work research: qualitative, quantitative, and mixed methods. These methods are used in research for evaluating practice and understanding interventions, as well. The primary emphasis in this module revolves around quantitative research designs for practice evaluation and understanding interventions. However, taking a few moments to examine qualitative and mixed methods in these applications is worthwhile. Additionally, we introduce forms of participatory research—something we did not discuss regarding efforts to understand social work problems and diverse populations. Participatory research is an approach rich in social work tradition.

The research questions asked by social workers about interventions often lend themselves to qualitative study approaches. Here are 5 examples.

Qualitative approaches often involve in-depth data from relatively few individuals, seeking to understand their individual experiences with an intervention. As such, these study approaches are relatively sensitive to nuanced individual differences—differences in experience that might be attributed to cultural, clinical, or other demographic diversity. This is true, however, only to the extent that diversity is represented among study participants, and individuals cannot be presumed to represent groups or populations.

Many intervention and evaluation research questions are quantitative in nature, leading investigators to adopt quantitative approaches or to integrate quantitative approaches in mixed methods research. In these instances, “how much” or “how many” questions are being asked, questions such as:

Many study designs detailed in Chapter 2 reflect the philosophical roots of quantitative research, particularly those designed to zero in on causal inferences about intervention—the explanatory research designs. Quantitative approaches are also used in descriptive and exploratory intervention and evaluation studies. By nature, quantitative studies tend to aggregate data provided by individuals, and in this way are very different from qualitative studies. Quantitative studies seek to describe what happens “on average” rather than describing individual experiences with the intervention—you learned about central tendency and variation in our earlier course (Module 4). Differences in experience related to demographic, cultural, or clinical diversity might be quantitatively assessed by comparing how the intervention was experienced by different groups (e.g., those who differ on certain demographic or clinical variables). However, data for the groups are treated in the aggregate (across individuals) with quantitative approaches.
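As a minimal sketch of what treating data "in the aggregate" can look like, the following Python example summarizes outcome scores by group rather than by individual. The group labels, the scores, and the function name `summarize_by_group` are hypothetical and used only for illustration; they do not come from any study discussed here.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical post-intervention scores as (group label, score) pairs.
scores = [
    ("younger", 12), ("younger", 15), ("younger", 18),
    ("older", 20), ("older", 22), ("older", 27),
]


def summarize_by_group(records):
    """Return count, mean, and sample standard deviation for each group."""
    grouped = defaultdict(list)
    for group, score in records:
        grouped[group].append(score)
    return {
        group: {"n": len(values), "mean": mean(values), "sd": stdev(values)}
        for group, values in grouped.items()
    }


summary = summarize_by_group(scores)
for group, stats in summary.items():
    print(f"{group}: n={stats['n']}, mean={stats['mean']:.1f}, sd={stats['sd']:.2f}")
```

The summary reports central tendency and variation for each group, which supports group comparisons, but no individual participant's experience can be recovered from it. That is the trade-off relative to qualitative approaches.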

Qualitative and quantitative approaches are very helpful in evaluation and intervention research as part of a mixed-methods strategy for investigating the research questions. In addition to the examples previously discussed, integrating qualitative and quantitative approaches in intervention and evaluation research is often done as a means of enriching the results derived from one or the other approach. Here are 3 scenarios to consider.

For example, a team of investigators applied a mixed methods approach in evaluating outcomes of an intensive experiential learning experience designed to prepare BSW and MSW students to engage effectively in clinical supervision (Fisher, Simmons, & Allen, 2016). BSW students provided quantitative data in response to an online survey, and MSW students provided qualitative self-assessment data. The quantitative data answered a research question about how students felt about supervision, whereas the qualitative data were analyzed for demonstrated development in critical thinking about clinical issues. The investigators concluded that their experiential learning intervention contributed to the outcomes of forming stronger supervisory alliance, BSW student satisfaction with their supervisor, and MSW students thinking about supervision as being more than an administrative task.

You are familiar with the distinction between cross-sectional and longitudinal study designs from our earlier course. In that course, we looked at these designs in terms of understanding diverse populations, social work problems, and social phenomena. Here we address how the distinction relates to the conduct of research to understand social work interventions.

Practice and program evaluation are important aspects of social work practice. It would be nice if we could simply rely on our own sense of what works and what does not. However, social workers are only human and, as we learned in our earlier course, human memory and decisions are vulnerable to bias. Sources of bias include recency, confirmation, and social desirability biases.

In all three of these forms of bias, the problem is not necessarily intentional, but does result in a lack of sufficient attention to evidence in monitoring one’s practices. For example, relying solely on qualitative comments volunteered by consumers (anecdotal evidence) is subject to a selection bias—individuals with strong opinions or a desire to support the social workers who helped them are more likely to volunteer than the general population of those served.

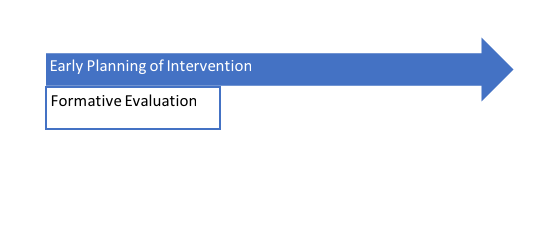

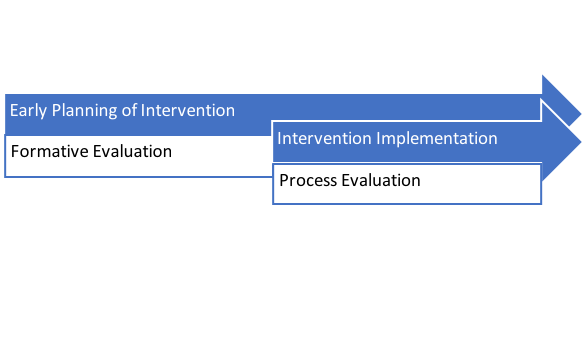

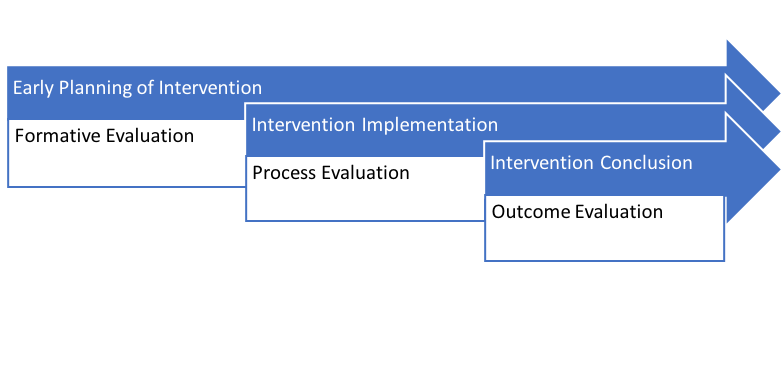

Thus, it is incumbent on social work professionals to engage in practice evaluation that is as free of bias as possible. The choice of systematic evaluation approach is dictated by the evaluation research question being asked. According to the Centers for Disease Control and Prevention (CDC), the four most common types of intervention or program evaluation are formative, process, outcome, and impact evaluation (https://www.cdc.gov/std/Program/pupestd/Types%20of%20Evaluation.pdf). Here, we consider these as three types, combining impact and outcome evaluation into a single category, and we consider an additional category, as well: cost evaluation.

Formative evaluation is emphasized during the early stages of developing or implementing a social work intervention, as well as following process or outcome evaluation as changes to a program or intervention strategy are considered. The aim of formative evaluation is to understand the context of an intervention, define the intervention, and evaluate feasibility of adopting a proposed intervention or change in the intervention (Trochim & Donnelly, 2007). For example, a needs assessment might be conducted to determine whether the intervention or program is needed, calculate how large the unmet need is, and/or specify where/for whom the unmet need exists. Needs assessment might also include conducting an inventory of services that exist to meet the identified need and where/why a gap exists (Engel & Schutt, 2013). Formative evaluation is used to help shape an intervention, program, or policy.

Investigating how an intervention is delivered or a program operates is the purpose behind process evaluation (Engel & Schutt, 2013). The concept of intervention fidelity was previously introduced. Fidelity is a major point of process evaluation but is not the only point. We know that the greater the degree of fidelity in delivery of an intervention, the more applicable the previous evidence about that intervention becomes in reliably predicting intervention outcomes. As fidelity in the intervention’s delivery drifts or wanes, previous evidence becomes less reliable and less useful in making practice decisions. Addressing this important issue is why many interventions with an evidence base supporting their adoption are manualized, providing detailed manuals for how to implement the intervention with fidelity and integrity. For example, the Parent-Child Interaction Therapy for Traumatized Children (PCIT-TC) treatment protocol is manualized and training certification is available for practitioners to learn the evidence-based skills involved (https://pcit.ucdavis.edu/). This strategy increases practitioners’ adherence to the protocol.

Process evaluation, sometimes called implementation evaluation and sometimes referred to as program monitoring, helps investigators determine the extent to which fidelity has been preserved. However, process evaluation serves other purposes as well. For example, according to King, Morris, and Fitz-Gibbon (1987), process evaluation helps:

Process evaluation also helps investigators determine where the facilitators and barriers to implementing an intervention might operate and can help interpret outcomes/results from the intervention, as well. Process evaluation efforts address the following:

For these reasons, many authors consider process evaluation to be a type of formative evaluation.

The aim of outcome or impact evaluation is to determine effects of the intervention. Many authors refer to this as a type of summative evaluation, distinguishing it from formative evaluation: its purpose is to understand the effects of an intervention once it has been delivered. The effects of interest usually include the extent to which intervention goals or objectives were achieved. An important factor to evaluate concerns positive and negative “side effects,” the unintended outcomes associated with the intervention. These might include unintended impacts on the intervention participants or impacts on significant others, those delivering the intervention, the program/agency/institutions involved, and others. While impact evaluation, as described by the CDC, is about policy and funding decisions and longer-term changes, we can include it as a form of outcome evaluation since the questions answered are about achieving intervention objectives. Outcome evaluation is based on the elements presented in the logic model created at the outset of intervention planning.

Social workers are frequently faced with efficiency questions related to the interventions we deliver—thus, cost-related evaluation is part of our professional accountability responsibilities. For example, once an agency has applied the evidence-based practice (EBP) process to select the best-fitting program options for addressing an identified practice concern, program planning is enhanced by information concerning which of the options is most cost-effective. Here are some types of questions addressed in cost-related evaluation.

Cost analysis: How much does it cost to deliver/implement the intervention with fidelity and integrity? This type of analysis typically analyzes monetary costs, converting inputs into their financial impact (e.g., space resources would be converted into cost per square foot; staffing costs would include salary, training, and benefits costs; materials and technology costs might include depreciation).

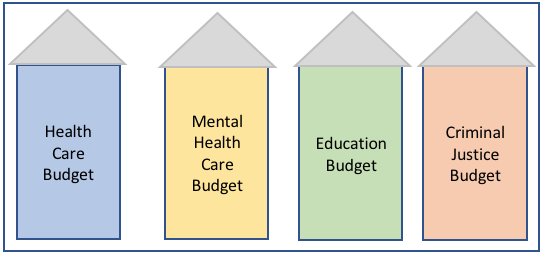

Two of the greatest challenges with these kinds of evaluation are (1) ensuring that all relevant inputs and outputs are included in the analysis, and (2) realistically converting non-monetary costs and benefits into monetary units to standardize comparisons. An additional challenge has to do with budget structures: the gains might be realized in a different budget than where the costs are borne. For example, implementing a mental health or substance misuse treatment program in jails and prisons costs those facilities; the benefits are realized in budgets outside those facilities—schools, workplaces, medical facilities, family services, and mental health programs in the community. Thus, it is challenging to make decisions based on these analyses when constituents are situated in different systems operating with “siloed” budgets where there is little or no sharing across systems.
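To make the idea of converting inputs into monetary costs concrete, here is a minimal Python sketch of a basic cost analysis. Every figure, input name, and the helper `cost_analysis` are hypothetical assumptions chosen for illustration. A real analysis would need to include all relevant inputs and defensible unit costs, which is exactly the first challenge noted above.

```python
from dataclasses import dataclass


@dataclass
class CostInput:
    """One program input expressed as a quantity and a dollar cost per unit."""
    name: str
    quantity: float   # e.g., square feet, staff hours, items
    unit_cost: float  # dollars per unit (hypothetical values below)

    @property
    def total(self) -> float:
        return self.quantity * self.unit_cost


def cost_analysis(inputs, participants_served):
    """Return (total program cost, cost per participant served)."""
    if participants_served <= 0:
        raise ValueError("participants_served must be positive")
    total = sum(item.total for item in inputs)
    return total, total / participants_served


# Hypothetical annual inputs for a small program.
inputs = [
    CostInput("office space (sq ft)", 500, 20.0),
    CostInput("staff time, salary and benefits (hours)", 1000, 35.0),
    CostInput("staff training (sessions)", 4, 750.0),
    CostInput("materials and technology depreciation (items)", 10, 200.0),
]

total_cost, cost_per_participant = cost_analysis(inputs, participants_served=100)
print(f"Total cost: ${total_cost:,.2f}")
print(f"Cost per participant: ${cost_per_participant:,.2f}")
```

This sketch covers only costs borne by the program itself. It does not capture benefits realized in other systems' budgets, so it cannot by itself resolve the "siloed" budget problem described above.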

An intervention or evaluation effort does not necessarily need to be limited to one type. As in the case of mixed-methods approaches, it is sometimes helpful to engage in multiple evaluation efforts with a single intervention or program. A team of investigators described how they used formative, process, and outcome evaluation all in the pursuit of understanding a single preventive public health intervention called VERB, designed to increase physical activity among youth (Berkowitz et al., 2008). Their formative evaluation efforts allowed the team to assess the intervention’s appropriateness for the target audience and to test different messages. The process evaluation addressed fidelity of the intervention during implementation. The outcome evaluation led the team to draw conclusions concerning the intervention’s effects on the target audience. The various forms of evaluation utilized qualitative and quantitative approaches.

One contrast previously noted between qualitative and quantitative research is the nature of the investigator’s role. Every effort is made to minimize investigator influence on the data collection and analysis processes in quantitative research. Qualitative research, on the other hand, recognizes the investigator as an integral part of the research process. Participatory research fits into this latter category.

“Participant observation is a method in which natural social processes are studied as they happen (in the field, rather than in the laboratory) and left relatively undisturbed. It is a means of seeing the social world as the research subjects see it, in its totality, and of understanding subjects’ interpretations of that world” (Engel & Schutt, 2013, p. 276).

This quote describes naturalistic observation very well. The difference with participatory observation is that the investigator is embedded in the group, neighborhood, community, institution, or other entity under study. Participatory observation is one approach used by anthropologists to understand cultures from an embedded rather than outsider perspective. For example, this is how Jane Goodall learned about chimpanzee culture in Tanzania: she became accepted as part of the group she observed, allowing her to describe the members’ behaviors and social relationships, her own experiences as a member of the group, and the theories she derived from 55 years of this work. In social work, the participant approach may be used to answer the research questions of the type we explored in our earlier course: understanding diverse populations, social work problems, or social phenomena. The investigator might be a natural member of the group, where the role as group member precedes the role as observer. This is where the term indigenous membership applies: naturally belonging to the group. (The term “indigenous people” describes the native, naturally occurring inhabitants of a place or region.) It is sometimes difficult to determine how the indigenous member’s observations and conclusions might be influenced by his or her position within the group—for example, the experience might be different for men and women, members of different ages, or leaders. Thus, the conclusions need to be confirmed by a diverse membership.

Participant observers are sometimes “adopted” members of the group, where the role of observer precedes their role as group member. It is somewhat more difficult to determine if evidence collected under these circumstances reflects a fully accurate description of the members’ experience unless the evidence and conclusions have been cross-checked by the group’s indigenous members. Turning back to our example with Jane Goodall, she was accepted into the chimpanzee troop in many ways, but not in others—she could not experience being a birth mother to members of the group, for example.

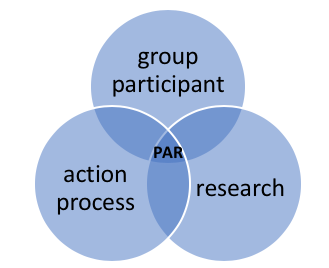

Sometimes investigators are more actively engaged in the life of the group being observed. As previously noted, participant observation is about the processes being left relatively undisturbed (Engel & Schutt, 2013, p. 276). However, participant observers might be more actively engaged in change efforts, documenting the change process from “inside” the group promoting change. These instances are called participatory action research (PAR), where the investigator is an embedded member of the group, joining them in making a concerted effort to influence change. PAR involves three intersecting roles: participation in the group, engaging with the action process (planning and implementing interventions), and conducting research about the group’s action process (see Figure 2-1, adapted from Chevalier & Buckles, 2013, p. 10).

Figure 2-1. Venn diagram of participatory action research roles.

For example, Pyles (2015) described the experience of engaging in participatory action research with rural organizations and rural disaster survivors in Haiti following the January 12, 2010 earthquake. The PAR aimed to promote local organizations’ capacity to engage in education and advocacy and to secure much-needed resources for their rural communities (Pyles, 2015, p. 630). According to the author, rural Haitian communities have a history of experience with exploitative research where outsiders conduct investigations without the input or participation of community members, and where little or no capacity-building action occurs based on study results and recommendations. Pyles also raised the point that, “there are multiple barriers impeding the participation of marginalized people” in community building efforts, making PAR approaches even more important for these groups (2015, p. 634).

The term community-based participatory research (CBPR) refers to collaborative partnerships between members of a community (e.g., a group, neighborhood, or organization) and researchers throughout the entire research process. CBPR partners (internal and external members) all contribute their expertise throughout the process and share in all steps of decision-making. Stakeholder members of the community (or organization) are involved as active, equal partners in the research process; co-learning by all members of the collaboration is emphasized; and CBPR represents a strengths-focused approach (Harris, 2010; Holkup, Tripp-Reimer, Salois, & Weinert, 2004). CBPR is relevant in our efforts to understand social work interventions because the process can result in interventions that are culturally appropriate, feasible, acceptable, and applicable for the community, having emerged from within that community. Furthermore, it is a community empowerment approach whereby self-determination plays a key role and the community is left with new skills for self-study, evaluation, and understanding the change process (Harris, 2010). The following characteristics of CBPR help define the approach:

(a) recognizing the community as a unit of identity,

(b) building on the strengths and resources of the community,

(c) promoting colearning among research partners,

(d) achieving a balance between research and action that mutually benefits both science and the community,

(e) emphasizing the relevance of community-defined problems,

(f) employing a cyclical and iterative process to develop and maintain community/research partnerships,

(g) disseminating knowledge gained from the CBPR project to and by all involved partners, and

(h) requiring long-term commitment on the part of all partners (Holkup, Tripp-Reimer, Salois, & Weinert, 2004, p. 2).

Quinn et al. (2017) published a case study of CBPR practices being employed with youth at risk of homelessness and exposure to violence. The authors cited a “paucity of evidence-based, developmentally appropriate interventions” to address the mental health needs of youth exposed to violence (p. 3). The CBPR process helped determine the acceptability of a person-centered trauma therapy approach called narrative exposure therapy (NET). The results of three pilot projects combined to inform the design of a randomized controlled trial (RCT) to study the impact of the NET intervention. The three pilot projects engaged researchers and members of the population to be served (youth at risk of homelessness and exposure to violence). The authors of the case study article discussed some of the challenges of working with youth in the CBPR process and research process. Adapted from Quinn et al. (2017), these included:

Awareness of these challenges can help CBPR teams develop solutions to overcome the barriers. The CBPR process, while time and resource intensive, can result in appropriate intervention designs for under-served populations where existing evidence is not available to guide intervention planning.

A somewhat different approach engages members of the community as consultants regarding interventions with which they may be engaged, rather than a fully CBPR approach. This adapted consultation approach presents an important option for ensuring that interventions are appropriate and acceptable for serving the community. However, community members are less integrally involved in the action-related aspects of defining and implementing the intervention, or in the conduct of the implementation research. An example of this important community-as-consultant approach involved a series of six focus group sessions conducted with parents, teachers, and school stakeholders discussing teen pregnancy prevention among high-school aged Latino youth (Johnson-Motoyama et al., 2016). The investigating team reported recommendations and requests from these community members concerning the important role played by parents and potential impact of parent education efforts in preventing teen pregnancy within this population. The community members also identified the importance of comprehensive, empowering, tailored programming that addresses self-respect, responsibility, and “realities,” and incorporates peer role models. They concluded that local school communities have an important role to play in planning for interventions that are “responsive to the community’s cultural values, beliefs, and preferences, as well as the school’s capacity and teacher preferences” (p. 513). Thus, the constituencies involved in this project served as consultants rather than CBPR collaborators. However, the resulting intervention plans could be more culturally appropriate and relevant than intervention plans developed by “outsiders” alone.

One main limitation to conducting CBPR work is the immense amount of time and effort involved in developing strong working collaborative relationships—relationships that can stand the test of time. Collaborative relationships are often built from a series of “quick wins” or small successes over time, where the partners learn about each other, learn to trust each other, and learn to work together effectively.

This chapter began with a review of concepts from our earlier course: qualitative, quantitative, mixed-methods, cross-sectional and longitudinal approaches. Expanded content about approach came next: formative, process, outcome, and cost evaluation approaches were connected to the kinds of intervention questions social workers might ask, and participatory research approaches were introduced. Issues of cultural relevance were explored, as well. This discussion of approach leads to an expanded discussion of quantitative study design strategies, which is the topic of our next chapter.

Take a moment to complete the following activity.